
Virtual reality (VR) applications are growing rapidly across sectors as diverse as entertainment, professional services and even education.

VR technology is now being extended in an innovative way to the automotive industry by London-based independent creative design studio NewTerritory.

NewTerritory has created a multi-sensory virtual reality experience which has been designed to assist the automotive industry and mitigate some of the challenges presented by semi-autonomous driving.

Using the latest VR technology, the company gives the test driver a fully immersive experience while monitoring their heart rate, their reactions to various stimuli and much more.

We visited the NewTerritory office in London to speak with design and creative tech director Tim Smith, to find out more about this unique technology.

Just Auto (JA): How did you come to your role at NewTerritory?

Tim Smith (TS): My background is in automotive technology. Over the last ten years I’ve worked with big brands such as Ford, Volkswagen and Porsche, but also with companies like Google and Apple.

I was always in digital and creative technology, and one of the things I started to find more recently in my career was that there was always a physical component that was necessary to complete the experience.

When I joined NewTerritory it had the opposite problem: the team was already designing a lot of the physicality of these in-car and transportation experiences, but had reached the point where it needed some digital and creative technology. I was hired to bolster that side.

It feels like we can provide a complete package, and for me personally, I can see projects through from start to finish and make bigger differences to customers.

How did this project start?

TS: The first thing I did when I started was persuade the team, though it wasn’t difficult; they were very keen on this project. We said, let’s create a project that demonstrates the power of merging the physical with the digital. The Metaverse was coming up a lot in discussion, and I saw it as an amazing testbed for trying out experiences safely.

We can’t test this technology in a real car or on real roads, the laws won’t allow it, but in the Metaverse, in a virtual simulated world, we can freely test a number of experiences.

I’ve always thought the car is the only consumer electronic product that you can step into, and it’s something that can transport you through space and time. In the last few years we’ve seen the likes of Apple and Google take some of the automotive industry’s lunch, by which I mean car-sharing or even creating their own cars. There’s an opportunity here for the automotive industry to win some of that lunch back.

For me there’s no more compelling use for voice assistance than in the car; I think it’s Rolls-Royce that has ‘Elena’, its own AI system. Other car companies have similar things. The car is an ideal application for voice assistants, and in time people may well trust, say, ‘Elena’ more than they trust ‘Siri’.

I also thought the automotive industry should stop looking at the centre console. There’s a whole vehicle, a controlled environment, that can, in theory, read your heart rate, see how quickly you’re blinking, measure your pupil dilation and how fast you’re breathing. From all these signals you can infer not just how tired you are, but how excitable you might be or how susceptible you might be to certain information.

What this prototype does is create a multi-sensory experience that could curate people’s moods and their cognitive ability.

What is the ‘cognition Goldilocks Zone’?

TS: I worked with University College London as a guest lecturer a couple of years ago. We were thinking about this problem around level 4 autonomy. At the time, and I’m not sure if it’s still the case, Google and Ford had decided to skip level 4 completely because it was too difficult, perhaps not from a technological point of view but from the human perspective. Level 4 is pretty much fully autonomous, a robocar. Below that level it’s not fully autonomous driving, because there will be points at which the driver has to take over: there is still human responsibility for part of the journey.

Imagine the car asking you to take over at 70mph after you’ve been reading a book: your situational awareness is completely in the gutter, and it’s difficult to take control at that speed.

So we were trying to understand what the cognitive load was like at that stage. It’s as if the muscle isn’t warmed up enough to give you the situational awareness to take over the drive. Going from one context, such as reading a book, to driving involves a completely different cognitive load.

We did a number of tests around how people performed depending on different cognitive stresses.

There was one test where we asked someone to watch an episode of the TV show ‘Friends’ on an iPad, and then we would give them the trigger to take over – we noticed that the performance for driving was terrible.

Another interesting finding was what happened when they were what we call ‘over-stimulated’: there were too many billboards at the side of the road, music playing, the window down, and noise and hazards on the road. They were over-stimulated by the drive, and that made them equally dangerous.

We realised that you can be under-stimulated, and you can be over-stimulated, so there must be a cognition ‘Goldilocks Zone’ – an optimal level of stimulation. What we found is that we were able to do some interventions, to bump them up or down into the cognition Goldilocks Zone, and then the driving performance afterwards really improved.

The way we’re testing at the moment is very rudimentary: it’s based on heart rate, so we know what each person’s average heart rate is. If it’s a certain percentage below their average, they’re under-stimulated; if it’s a certain percentage above, they’re over-stimulated.

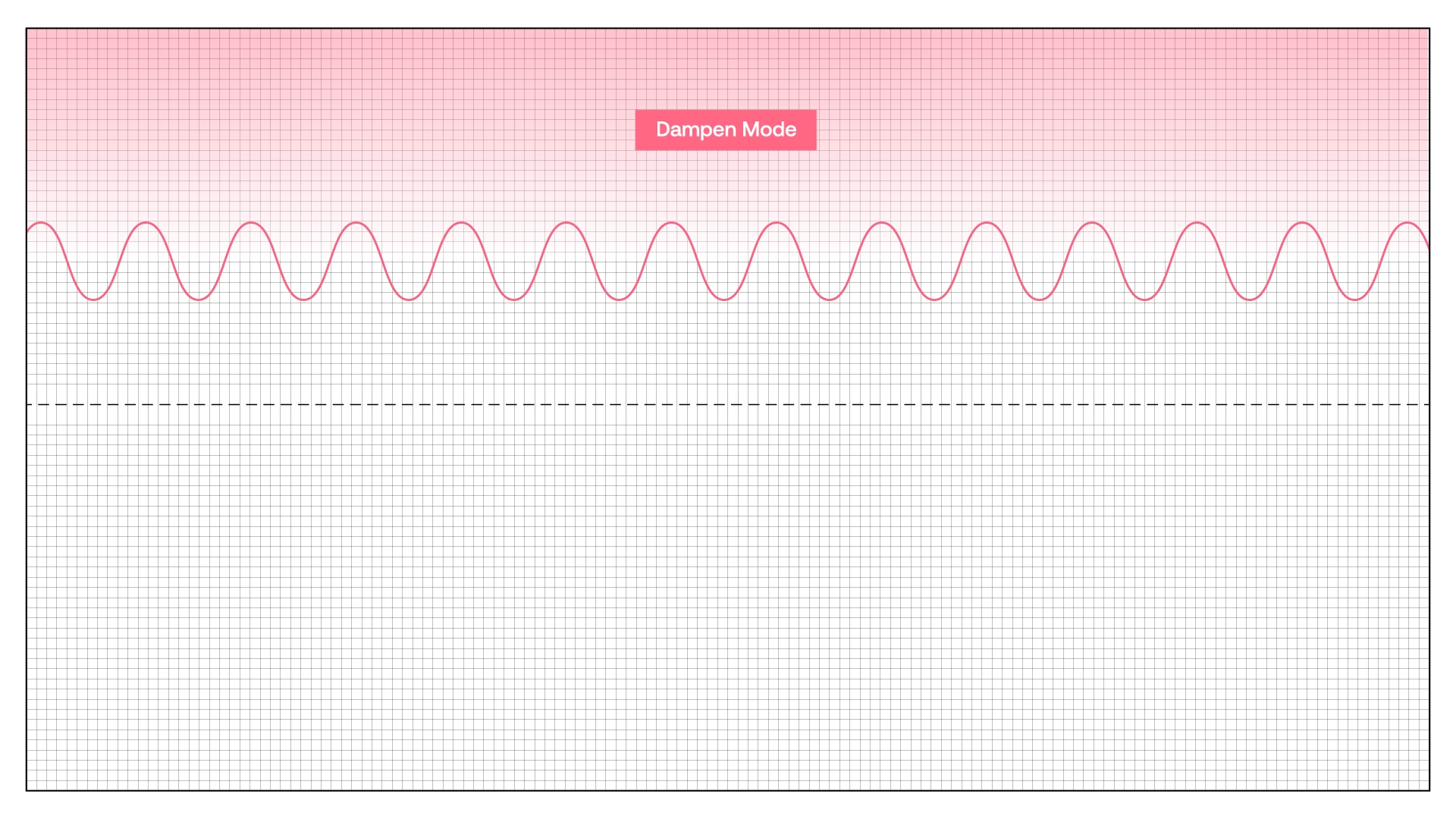

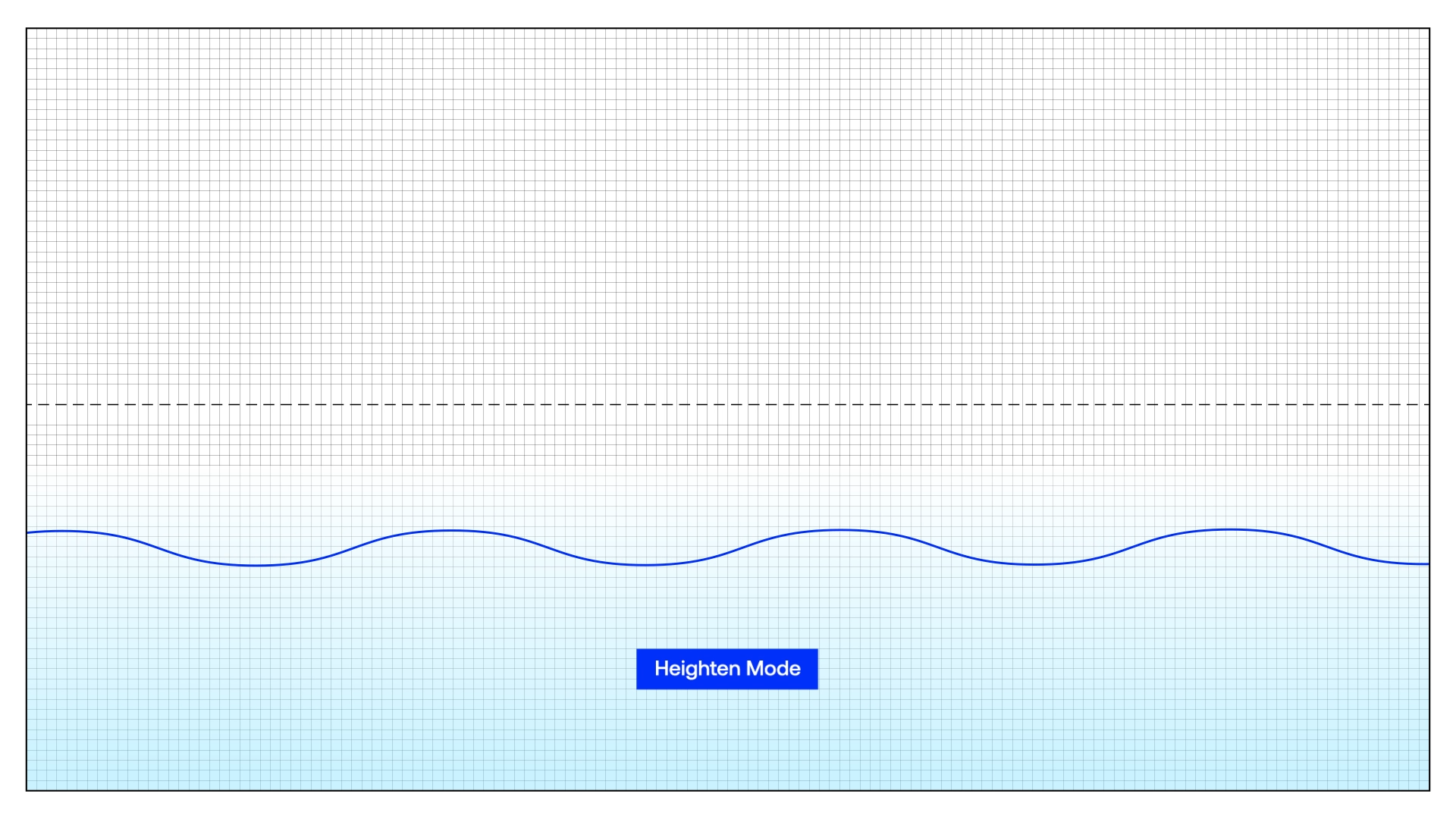

That’s what triggers what we call dampen mode or heighten mode. It’s different for each person. In practice, the car will get to know your resting heart rate after a few drives, so if it goes above or below that, the technology knows when to kick in.
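The trigger Smith describes amounts to comparing a reading against each driver’s own baseline. The Python sketch below is a hypothetical illustration, not NewTerritory’s code: the 10% thresholds are placeholder assumptions (the interview only says “a certain percentage”), and `learn_baseline` simply averages heart rates from earlier drives as one possible way for the car to “get to know” a driver.

```python
from enum import Enum
from statistics import fmean
from typing import Sequence


class Mode(Enum):
    HEIGHTEN = "heighten"  # under-stimulated: raise alertness
    NEUTRAL = "neutral"    # inside the cognition Goldilocks Zone
    DAMPEN = "dampen"      # over-stimulated: calm the driver down


def learn_baseline(drive_averages: Sequence[float]) -> float:
    """Hypothetical: average the mean heart rate (bpm) of previous drives."""
    if not drive_averages:
        raise ValueError("need at least one previous drive")
    return fmean(drive_averages)


def select_mode(current_bpm: float, baseline_bpm: float,
                low_pct: float = 0.10, high_pct: float = 0.10) -> Mode:
    """Compare the current heart rate with the driver's own baseline.

    low_pct and high_pct are assumed placeholder values, not figures
    taken from the prototype.
    """
    if baseline_bpm <= 0:
        raise ValueError("baseline_bpm must be positive")
    if current_bpm < baseline_bpm * (1 - low_pct):
        return Mode.HEIGHTEN  # a certain percentage below average
    if current_bpm > baseline_bpm * (1 + high_pct):
        return Mode.DAMPEN    # a certain percentage above average
    return Mode.NEUTRAL


if __name__ == "__main__":
    baseline = learn_baseline([68, 72, 70])  # 70 bpm
    for bpm in (60, 72, 80):
        print(bpm, select_mode(bpm, baseline).value)
```

With a 70 bpm baseline, a reading of 60 selects heighten mode and 80 selects dampen mode. Expressing the thresholds as fractions of a personal baseline, rather than fixed bpm values, is what makes the trigger point different for each person.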

For our readers who can’t see the set-up like I can, can you explain the technology that you have here?

TS: The first thing I’ll start with is the browser-based control panel, which we use to talk to several physical devices. This is so we can see how everything is performing.

Then we have this whole rig. If you took the car seats away, this could be a gaming experience or a retail experience, but for this prototype we are just testing it in the semi-autonomous context.

We’ve got two very authentic car seats (made from some MDF stuck onto office chairs), and the rig itself, which is a skeleton on which we can hang a number of pieces of technology, so over time we can swap out things that don’t work as well, or add things we want.

At the top of the rig we have these scent dispensers: one is associated with heighten mode and one with dampen mode. In the dampen mode bottle we have yuzu, which is good for focus; in the heighten mode bottle we have citrus, which helps make people more alert.

We really are multi-sensory, so we also consider taste: we give people a lollipop to put in their mouth depending on whether it’s dampen mode or heighten mode. That comes from the idea that sucking mints helps people focus while driving.

Taste is a very difficult sense to design for, as it requires the person to initiate it, usually with their hands, which should be on the wheel. A tin of mints on the dashboard was how my granddad kept himself focussed and entertained while driving long distances; perhaps a brand needs to come along and introduce specialist driver sweets with fan-mounting packaging. This is a tough one…

Then we have what we call a multi-directional fan system: a main fan that simulates the car’s air conditioning, and a side fan that simulates an open window.

In the seat here we’ve got a muscle massager, and another one in the headrest. They create what we’re calling HD haptic feedback. It’s a bit like the Nintendo Switch’s HD Rumble: it’s not just on or off, it can render various strengths and directions of vibration. We use them either to mimic different road conditions or to jolt people awake or alert them to something.

Then we have this glove, which we call the glove of truth. When you wear it, it reads your heart rate. In the first minute it takes your average heart rate; then, once the experience begins, it can see if your heart rate is dipping or peaking, and that’s when it triggers heighten or dampen mode.
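The glove’s two phases, a one-minute calibration followed by continuous monitoring, can be sketched as a small stateful class. This is a hypothetical Python illustration: the class name, the (timestamp, bpm) input format and the 10% band are assumptions, as the interview does not describe the glove’s data format or exact thresholds.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GloveMonitor:
    """Hypothetical model of the 'glove of truth' logic described above."""
    calibration_s: float = 60.0  # "in the first minute"
    band: float = 0.10           # assumed +/- fraction around the average
    baseline: Optional[float] = field(default=None, init=False)
    _start: Optional[float] = field(default=None, init=False)
    _samples: List[float] = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        if self.calibration_s <= 0:
            raise ValueError("calibration_s must be positive")

    def feed(self, t: float, bpm: float) -> Optional[str]:
        """Take one reading (t in seconds, bpm in beats per minute).

        Returns None while calibrating, then "heighten", "dampen" or "neutral".
        """
        if self._start is None:
            self._start = t
        if self.baseline is None:
            if t - self._start < self.calibration_s:
                self._samples.append(bpm)  # still inside the first minute
                return None
            # First reading after the window: fix the average heart rate.
            self.baseline = sum(self._samples) / len(self._samples)
        if bpm < self.baseline * (1 - self.band):
            return "heighten"  # heart rate dipping
        if bpm > self.baseline * (1 + self.band):
            return "dampen"    # heart rate peaking
        return "neutral"


if __name__ == "__main__":
    monitor = GloveMonitor()
    for t in range(0, 60, 10):
        monitor.feed(t, 70)       # calibration readings
    print(monitor.feed(60, 60))   # dip below the 70 bpm average
```

Locking the average in once, rather than letting it drift during the drive, keeps a slow slide into drowsiness from quietly becoming the new “normal”.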

We have a small section for temperature, which is just a heater you can turn on and off. Then the most technical element is the VR headset, the latest VIVE headset, which gives you an audio and visual sense of what’s going on.

In three to four years’ time, where do you see this technology being?

TS: All of this hardware probably already exists in cars in some form, but they don’t work in harmony. They don’t work together for this purpose – all it requires is a bit of clever code that connects the hardware.
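Smith’s point that existing hardware mostly needs “a bit of clever code” to connect it can be illustrated with a single table that maps each mode to commands for several devices. The Python sketch below is hypothetical: the device ids, command strings and the `send` callback are invented for illustration, and only the yuzu/citrus, lollipop and haptic roles come from the rig described in the interview.

```python
from typing import Callable, Dict, List, Tuple

Command = Tuple[str, str]  # (hypothetical device id, command string)

# Hypothetical device ids and commands, loosely based on the rig described.
MODE_ACTIONS: Dict[str, List[Command]] = {
    "dampen": [
        ("scent_dampen", "release"),              # yuzu bottle
        ("lollipop_prompt", "offer dampen lollipop"),
    ],
    "heighten": [
        ("scent_heighten", "release"),            # citrus bottle
        ("lollipop_prompt", "offer heighten lollipop"),
        ("seat_haptics", "pulse"),                # jolt the driver awake
    ],
    "neutral": [],                                # Goldilocks Zone: do nothing
}


def dispatch(mode: str, send: Callable[[str, str], None]) -> int:
    """Send every command for `mode` through `send`; return how many were sent.

    `send` stands in for whatever transport actually reaches the hardware.
    """
    try:
        commands = MODE_ACTIONS[mode]
    except KeyError:
        raise ValueError(f"unknown mode: {mode!r}") from None
    for device, command in commands:
        send(device, command)
    return len(commands)


if __name__ == "__main__":
    dispatch("heighten", lambda device, cmd: print(f"{device}: {cmd}"))
```

Keeping the mapping in data rather than in code means a device can be added to or removed from a mode by editing one table, much as the rig’s skeleton lets hardware be swapped in and out.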

I think it’s this mode of thinking, more than the physicality of what you see here, that will hopefully be adopted into the car.

Accessibility is a key area of focus for me. When it comes to multi-sensory experiences, I’m hoping they become the norm for people with different needs and disabilities.

I think if we consider experiences more on this multi-sensory level, making sure we’ve thought of every possible stimulus each sense can take in, then not only do we make it a more immersive experience and a better brand experience, we actually make it more accessible as well.

I’ve actually done lots of testing with blind people; some of them own cars but can’t drive them, and the thing they always say is: “I just want to sit in the driver’s seat.” With driverless cars, that’s a possibility in theory.

I think the beauty of this prototype is that it’s multi-sensory. That’s better for brands, because they can give much more immersive brand experiences; it’s better for the everyday consumer, because they can have more pleasant experiences; and it’s better for accessibility, which increases the size of the market because more people can appreciate it.
